Are you planning to pilot Generative AI in your marketing stack, or already implementing it? You’re not alone. According to Gartner, 63% of marketing leaders plan to invest in Generative AI in the next 24 months.

When introducing AI solutions to your marketing stack, remember to establish clear KPIs to measure their effectiveness in your organization. Return on Investment (ROI), Total Cost of Ownership (TCO), Payback Period, and Adoption Rates are common metrics used for software investments.

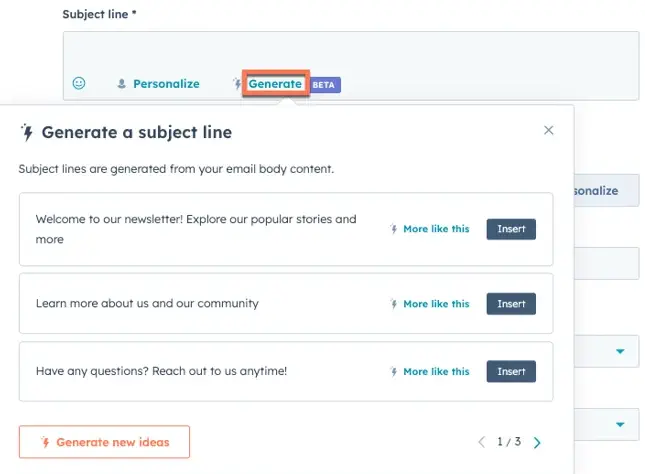
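As a rough illustration of two of the common metrics mentioned above, here is a minimal Python sketch of ROI and Payback Period. The function names and all figures are hypothetical, chosen only to show the arithmetic. It assumes the simplest textbook definitions: ROI as net benefit divided by cost, and payback period as upfront cost divided by a constant monthly net benefit.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """Return on investment, expressed as a fraction of total cost."""
    return (total_benefit - total_cost) / total_cost


def payback_period_months(upfront_cost: float, monthly_net_benefit: float) -> float:
    """Months until cumulative net benefit covers the upfront cost.

    Assumes the monthly net benefit is constant; returns infinity if
    the investment never pays back.
    """
    if monthly_net_benefit <= 0:
        return float("inf")
    return upfront_cost / monthly_net_benefit


# Hypothetical pilot figures, for illustration only.
print(roi(total_benefit=30_000, total_cost=20_000))  # 0.5, i.e. 50%
print(payback_period_months(upfront_cost=12_000, monthly_net_benefit=1_500))  # 8.0
```

In practice your finance team may use a different definition (for example, discounted cash flows), so treat this as a starting point only.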

Let's explore KPIs more specific to AI pilots and projects. I’ll use an example use case of employing a Generative AI solution to create different variants of subject lines for a marketing email.

Example AI subject line generation functionality in Hubspot:

Source: Hubspot Blog

Human-in-the-loop metrics (HITL)

Gen AI solutions can indeed reduce human involvement in various processes. However, in most cases, experts in the relevant business domain must be involved to properly set up, train, or fine-tune the AI solution before it delivers high-quality results. Even once the Gen AI solution is deployed, continuous monitoring is necessary to ensure the generated content maintains an acceptable level of quality.

Human-in-the-loop metrics measure the level of human involvement required for a piloted or implemented use case.

KPIs:

- Man-hours / days spent on solution setup, prompt preparation, and feedback loops.

In our example use case, we’d assess and measure:

- Does the piloted solution provide a way to customize the prompt used for subject line generation, e.g. to set a certain tone or to specify keywords to use or avoid? If yes, how long does it take to set up?

- Is there an easy way to aggregate & review the quality of the generated subject lines in the long run?

- What are the reporting and data visualization capabilities of the solution that come out-of-the-box?

Scalability

While applicable to any software, assessing scalability for AI solutions is often overlooked, especially by business stakeholders. Even when Generative AI solutions appear impressive at the individual prompt level, the crucial question remains: how will they perform at scale?

KPIs:

- Performance metrics under varying workloads (stress, load, endurance tests)

- Associated resource utilization

- Estimated cost of expanding the solution for a new use case

In the subject line generation example, we could ask ourselves the following:

- How long does it take to generate 3, 10, and 100 variants of a subject line from one version of a 100-word email body?

- How does the solution behave if we want to send out 10 versions of an email, each with 10 different variants of a generated subject line?

- If we started the campaign now, when would the last message be sent out?

- Does the number of generated variants impact the monthly bill we receive from the tool vendor? If yes, by how much?

- If the solution is self-hosted, what are the underlying infrastructure costs? How do they change once we start using the Gen AI features?

- How much time and effort would it take to expand the use case from emails alone to also start generating push notifications?
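The first question above can be answered with a small timing harness. The sketch below is only an illustration: `fake_generate` is a hypothetical stand-in for whatever call your tool or vendor API actually exposes, and `time_generation` is a helper name I made up. Swap in the real call to get meaningful numbers.

```python
import time
from typing import Callable, Dict, List


def fake_generate(email_body: str, n_variants: int) -> List[str]:
    """Hypothetical stand-in for the vendor's subject line generation call."""
    return [f"Subject variant {i + 1}" for i in range(n_variants)]


def time_generation(
    generate: Callable[[str, int], List[str]],
    email_body: str,
    batch_sizes: List[int],
) -> Dict[int, float]:
    """Return elapsed wall-clock seconds for each requested batch size."""
    timings: Dict[int, float] = {}
    for n in batch_sizes:
        start = time.perf_counter()
        variants = generate(email_body, n)
        timings[n] = time.perf_counter() - start
        # Guard against a tool that silently returns fewer variants.
        if len(variants) != n:
            raise ValueError(f"expected {n} variants, got {len(variants)}")
    return timings


email_body = " ".join(["word"] * 100)  # placeholder 100-word email body
for n, seconds in time_generation(fake_generate, email_body, [3, 10, 100]).items():
    print(f"{n} variants: {seconds:.4f}s total, {seconds / n:.6f}s per variant")
```

Running the same harness at different times of day, or with several email versions in parallel, gives a first impression of how the solution behaves under load.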

Error & correction rate

These metrics evaluate the rate at which the Generative AI solution produces incorrect or undesirable outputs. They are closely tied to human-in-the-loop metrics but focus solely on errors and the required corrections.

KPIs:

- Error rate before and after human review

- Correction rates

- Types of errors corrected

Returning to our example use case:

- What criteria will we use to classify an error in subject line generation? It's crucial to set clear rules that the generated output must adhere to, such as the required length of the subject line (e.g., between 3 and 7 words) or a list of prohibited words.

- What percentage of generated subject lines were corrected by a domain expert?

- Levenshtein Distance can be used to measure the average difference between the generated subject line and its corrected version. The lower it is, the higher the quality of the Gen AI solution.

- How does this distance change over time?

- Are there any email templates or content types that the solution consistently produces lower-quality subject line variants for?
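The checks above can be sketched in a few lines of Python. This is a minimal illustration under my own assumptions, not a definitive implementation: the helper names (`rule_violations`, `levenshtein`, `correction_stats`) are hypothetical, the rules are the ones from the example (3 to 7 words, a list of prohibited words), and the average distance is computed only over subject lines that were actually corrected.

```python
from typing import List, Set, Tuple


def rule_violations(
    subject: str, banned: Set[str], min_words: int = 3, max_words: int = 7
) -> List[str]:
    """Return the names of the rules a generated subject line breaks."""
    words = subject.split()
    problems: List[str] = []
    if not min_words <= len(words) <= max_words:
        problems.append("length")
    # Compare case-insensitively and ignore trailing punctuation.
    normalized = {w.strip(".,!?:;").lower() for w in words}
    if normalized & banned:
        problems.append("banned_word")
    return problems


def levenshtein(a: str, b: str) -> int:
    """Character-level edit distance, computed row by row."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # deletion
                current[j - 1] + 1,                    # insertion
                previous[j - 1] + (char_a != char_b),  # substitution
            ))
        previous = current
    return previous[-1]


def correction_stats(pairs: List[Tuple[str, str]]) -> Tuple[float, float]:
    """Return (correction rate, average distance over corrected pairs).

    Each pair is (generated subject line, version after expert review).
    """
    if not pairs:
        return 0.0, 0.0
    corrected = [(g, f) for g, f in pairs if g != f]
    rate = len(corrected) / len(pairs)
    if not corrected:
        return rate, 0.0
    average = sum(levenshtein(g, f) for g, f in corrected) / len(corrected)
    return rate, average


print(rule_violations("Free money now", banned={"free"}))  # ['banned_word']
print(levenshtein("kitten", "sitting"))  # 3
```

Tracking the output of `correction_stats` per week or per email template would cover the last two questions in the list.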

Integration success

Assess how well the Generative AI solution integrates with existing systems, workflows, and processes. Consider not only the systems needed for integration today but also how it aligns with your internal systems roadmap and how it will coexist with planned future developments.

KPIs:

- Integration time & cost

- Method & perceived ease of integration

- Impact on existing systems and workflows

For our subject line generation use case:

- Is the subject line generation feature readily available in our marketing automation system out-of-the-box, or do we need to integrate it with another piece of software specifically for that purpose?

- If integration is required, what interfaces are supported? How well-documented are they?

- How easily does it integrate with the Data Platform used by our organization?

- How does it fit into our wider MarTech stack strategy? If it is delivered in a SaaS model, is the pricing consistent with the other tools we use for Marketing Automation?

Feedback loop effectiveness

Establish an effective feedback loop for continuous improvement based on user feedback and system performance. This one is crucial at the early stages of Generative AI pilots and implementation.

KPIs:

- Feedback response time

- Time & cost of incorporation of feedback into model updates

- Iterative improvement metrics

Lastly, the questions to answer in our example use case are:

- How does the piloted solution allow for feedback collection? Do we have a mechanism to provide feedback indicating that a given generated subject line is better than others?

- How long does it take to regenerate the subject line after providing feedback?

- Can email open & click-through rates be used to assess the quality of the subject lines?

- Can these metrics be automatically fed back into the AI model to continuously enhance its performance?
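To make the open and click-through rate questions more concrete, here is a small Python sketch that ranks subject line variants by their observed performance. Everything in it is an assumption for illustration: the `VariantStats` class, the `rank_by_open_rate` helper, and the campaign numbers are hypothetical, and both rates are calculated against delivered emails (some teams divide clicks by opens instead).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VariantStats:
    subject: str
    delivered: int
    opens: int
    clicks: int

    @property
    def open_rate(self) -> float:
        return self.opens / self.delivered if self.delivered else 0.0

    @property
    def click_through_rate(self) -> float:
        # Assumption: clicks divided by delivered emails.
        return self.clicks / self.delivered if self.delivered else 0.0


def rank_by_open_rate(variants: List[VariantStats]) -> List[VariantStats]:
    """Best-performing subject line first."""
    return sorted(variants, key=lambda v: v.open_rate, reverse=True)


# Hypothetical campaign results, for illustration only.
results = [
    VariantStats("Your spring offer is here", delivered=1000, opens=220, clicks=40),
    VariantStats("Last chance to save", delivered=1000, opens=310, clicks=35),
]
for v in rank_by_open_rate(results):
    print(f"{v.subject}: open {v.open_rate:.1%}, click {v.click_through_rate:.1%}")
```

Whether a ranking like this can be fed back into the model automatically depends entirely on the tool, which is exactly what the pilot should establish.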

Summary

Incorporating these KPIs into the overall measures of your Gen AI pilots or implementation projects makes assessing their performance much more objective. They may also help uncover inefficiencies in existing processes or systems that would undermine the potential benefits of a wide AI rollout.